Meta introduced new safety features for teen accounts on Instagram on Wednesday. The Menlo Park-based social media giant said it is expanding its Teen Account protection and safety features to give teenagers more tools while they exchange messages with other users via the platform’s direct messaging (DM) feature. With the update, teenagers will be able to see when another user joined the platform, along with a series of safety tips to keep in mind while talking to strangers.

In a newsroom post, Meta said the new features are part of the company’s ongoing efforts to protect young people from direct and indirect harm, and to create an age-appropriate experience for them. The social media giant also highlighted that existing safety features such as Safety Notices, Location Notice, and Nudity Protection have helped millions of teenagers avoid harmful experiences on the platform.

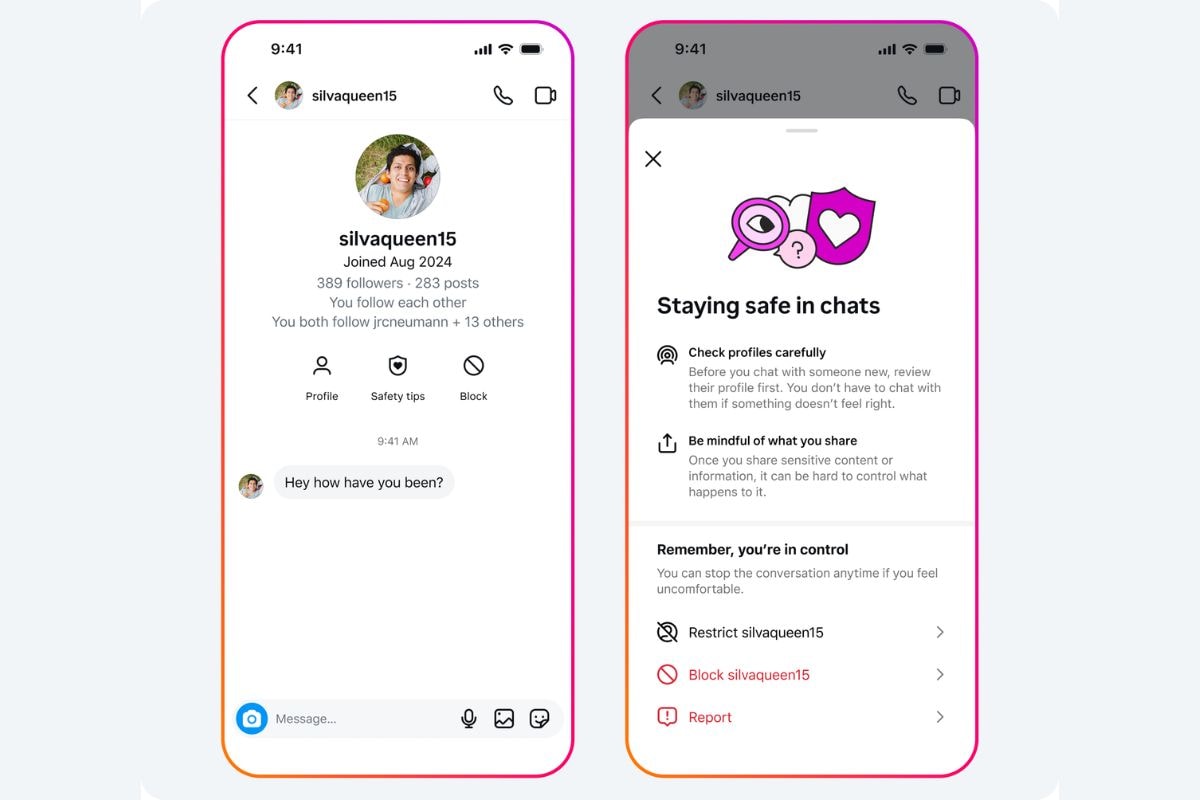

Both new safety features are available in Instagram DMs. The first shows teenagers safety tips when they are about to message another user, even if the two accounts follow each other. The tips ask the teenager to check the other person’s profile carefully and remind them that they “don’t have to chat with them if something doesn’t feel right.” They also remind underage users to be mindful of what they share with others.

When sending a DM to another user for the first time, teenagers will see the month and year that the account joined Instagram at the top of the chat interface. Meta says this will give users more context about the accounts they’re messaging and help them spot potential scammers more easily.

The second feature appears when a teenager attempts to block another user from within a DM chat. The bottom sheet will now show a “Block and report” option, allowing them to both block the account and report the incident to Meta. The company says the combined button will let teenagers end the interaction and inform the company with a few clicks, instead of taking the two actions separately.

Instagram’s new combined “Block and report” button

Photo Credit: Meta

Meta is also extending Teen Account safety features to adult-run accounts that primarily feature children. These are accounts where the profile picture is of a child and where adults regularly share photos and videos of their children. Typically, they are managed by parents or child talent managers on behalf of children under the age of 13.

Notably, while Meta allows adults to run accounts representing children as long as they mention that in their bio, accounts run by the child themselves are deleted.

The company said these adult-managed accounts will now receive some of the protections that are available to Teen Accounts. These include being placed in Instagram’s strictest message settings to prevent unwanted messages and having Hidden Words turned on to filter offensive comments. Instagram will start showing these accounts a notification at the top of their feed to let them know that their safety settings have been updated.
